
Do you want to increase sales and build even better relationships with your customers?

Artificial intelligence has entered the mainstream. We hear about it in the daily news, we use it every day on our devices. Have you ever wondered how it works? How do algorithms make decisions?

Turns out, it’s a field in its own right – it’s called explainable artificial intelligence, or XAI for short.

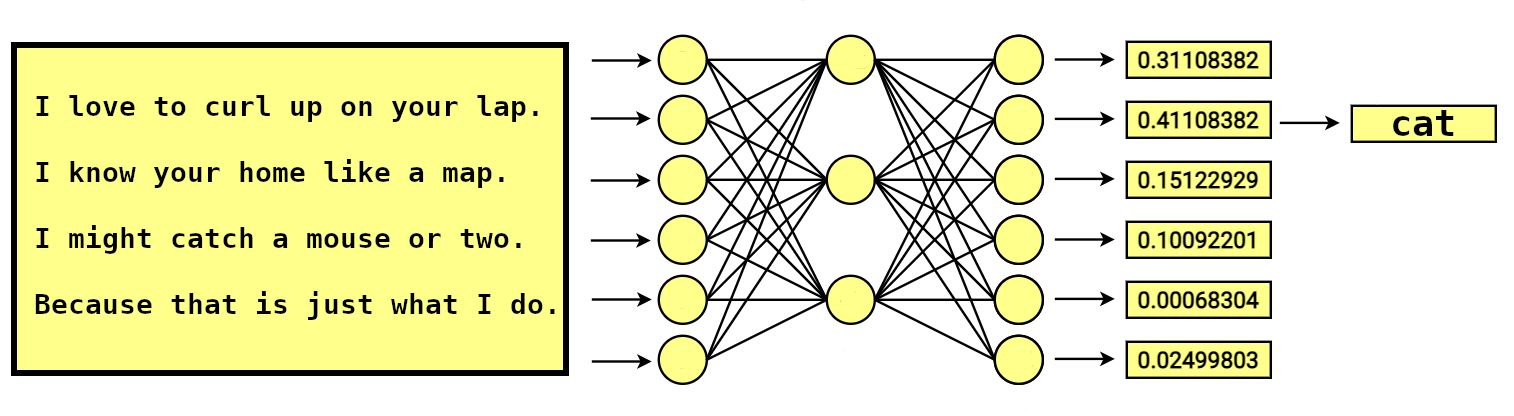

I love to curl up on your lap.

I know your home like a map.

I might catch a mouse or two.

Because that is just what I do.

What animal is this riddle about? And why?

It is very easy for us to answer questions like this. But let’s imagine that we put the same riddle to a deep learning text classifier. It would probably answer “cat”. But why? Because the activation in the last layer for the category ‘cat’ was the highest. Not very convincing, is it?

Current Explanation:

This is a cat.

XAI Explanation:

This is a cat:

- kneading is an activity common to all cats

- cats are territorial animals

- cats’ hunting behavior
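The “current explanation” above boils down to one step: pick the category whose last-layer activation is largest. Here is a minimal Python sketch of that step. The three categories and the activation values are made-up assumptions and do not come from any real model:

```python
import math


def softmax(logits):
    """Turn raw last-layer activations into probabilities that sum to 1."""
    m = max(logits)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical categories and made-up last-layer activations for the riddle.
labels = ["cat", "dog", "mouse"]
logits = [4.1, 1.3, 0.2]

probs = softmax(logits)
# The classifier's entire "explanation": the label with the highest score.
best = max(range(len(probs)), key=lambda i: probs[i])
prediction = labels[best]
print(prediction, [round(p, 3) for p in probs])
```

Nothing in this computation says why ‘cat’ scored highest. That is exactly the gap XAI methods try to close.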

As artificial intelligence develops and models become more and more complex, people increasingly ask why a particular outcome was reached.

In this blog post, we would like to introduce the concept of explainable (or equivalently for us: interpretable) artificial intelligence.

Introduction

The explainability of artificial intelligence models is being talked about more and more. We suspect the main reason is that, as we use AI solutions in more and more areas of our lives, we want to understand what such solutions do. This is reflected in XAI’s position on the Gartner hype cycle chart for emerging technologies.

In 2019, XAI entered the peak of inflated expectations and is still there, which means that an increasing number of people are interested in this topic.

But it is important to remember that Explainable AI is not a new field. It is at least as old as the early AI systems. Its roots go back to the 1970s, to abductive reasoning in expert systems (including applications in medical diagnosis, complex multi-component design, and reasoning about the real world). Kary Främling, in his article “Decision Theory Meets Explainable AI”, cites work from the 1970s that described methods for explainable artificial intelligence, although the term itself was not used explicitly.

XAI concerns artificial intelligence in a broad sense. For several decades this field has been shaped by machine learning algorithms, and in recent years by deep learning, so the examples in this article focus on XAI in machine learning (we won’t touch on methods for classic reasoning systems).

Okay, so we know this is not a new concept, though it has become quite popular recently. But what exactly is it?

How to define interpretability?

And here we run into an obstacle, because there is no agreed formal definition of XAI. Of course, there is a definition of interpretability in mathematical logic, but it is too general to be useful for us.

Fortunately, the literature offers several ways to define XAI directly. For example:

Interpretability is the degree to which a human can understand the cause of a decision.

Or:

Interpretability is the degree to which a human can consistently predict the model’s result.

These are somewhat similar to the Oxford English Dictionary definition of interpretability: ‘if something is interpretable, you can decide that it has a particular meaning and understand and explain it in this way’ (the Oxford Dictionary has no entry for the word explainable).

These definitions get us closer to our goal and help us understand what XAI is, but their weakness is that they are hard to quantify. For the purposes of this post, we’ll settle for them, but if you’d like to read more about defining explainability, see Christoph Molnar’s book Interpretable Machine Learning. If you are also interested in how explainability might be quantified, we recommend a research paper co-authored by Edroners.

Why do we need interpretability?

The answer to this question certainly depends on our role, but we personally feel uneasy about the fact that an increasing number of models are black boxes. In other words, we rely more and more on models whose complex structure is hard for humans to understand.

Of course, people vary in their capacity to understand complex models. It depends on their level of knowledge, and it is impossible to state clearly (for example, as a number of model parameters) the point at which understanding a model exceeds a person’s cognitive abilities.

Compared to machines, humans are also limited in their understanding of multidimensional relationships. According to psychologist George Miller, people can hold on average 7 ± 2 elements in short-term memory. Therefore, the number of parameters or variables a model can have while still being understandable to a human is small.

According to Biecek and Burzykowski, in practice this threshold is, for most people, probably closer to 10 than to 100 parameters, depending on whether we are dealing with an outsider or with a seasoned ML professional who has spent years analysing algorithm results. That is very little compared with the size of modern neural networks: the full version of GPT-3, for example, has 175 billion parameters.

So why not use only interpretable models? Simply because the complexity of a model tends to correlate with its quality. Roughly speaking, models that we are able to understand often give much poorer results than black boxes. This is illustrated by the graph below.

But returning to the question posed in this section’s heading, explainability is also needed for other reasons. For example, let’s look at one of the findings of the 22nd Annual Global CEO Survey:

What CEOs overwhelmingly ‘agree’ is that AI-based decisions need to be explainable in order to be trusted. More CEOs (84%) ‘agree’ with that statement than that AI is good for society (79%). Opening the algorithmic ‘black box’ is critical to AI’s going mainstream as the complexity and impact of its decisions grow (e.g. medical diagnoses, self-driving cars).

Let’s point out the most important reasons for tackling this topic:

- Safety. Machine learning models take on real-world tasks. For example, they support medical diagnostics, drive autonomous vehicles, assist with courtroom verdicts and support police patrols, to name a few. This requires safety measures and testing to make these models safe for society, and understanding such models is integral to ensuring their safety. In other words, machine learning models can only be audited when they can be interpreted.

- Legal regulations. You’ve probably heard of the General Data Protection Regulation (GDPR). This European Union regulation introduces a right to explanation, that is, a right to be given an explanation for the output of an algorithm. Such a law was introduced earlier in France: the French Digital Republic Act, or loi numérique, introduced an obligation to explain decisions made by public sector bodies about individuals. Similar rules for credit scoring exist in the United States, where creditors are required to notify applicants who are denied credit of the specific reasons for the denial. So when developing artificial intelligence solutions, we need to keep in mind that regulations in the country where our algorithm will operate may force interpretability on us. And coming back to Gartner, XAI is at the top of the Gartner Legal Tech Hype Cycle for 2021.

- Debugging. Interpretability is also a very useful tool for engineers developing AI solutions. It makes it easier to find potential bugs in the code, or components that weaken the performance of the system. If the model reflects biases embedded in the training data, XAI techniques may make them easier to detect.

- Social acceptance. The Official Journal of the European Union (vol. 60, issued 31 August 2017) contains the statement: “The acceptance and sustainable development and application of AI are linked to the ability to understand, monitor and certify the operation, actions and decisions of AI systems, including retrospectively.” It summarizes very well the criteria that must be met for the general public to accept the development of artificial intelligence. This is very important because some people are afraid of artificial intelligence, as evidenced by the negative discussions about it that heated up online last year. You can read more about the social acceptance of AI in the paper “Building Trust to AI Systems Through Explainability. Technical and Legal Perspectives”, co-authored by AVA team members.

- Human curiosity. People have a deep need to find meaning in the world around them, so human curiosity and the desire to learn are important reasons to take up this topic.

Of course, explainability should not always be a precondition for using a model. If a model has no significant impact (take an AI-powered Tamagotchi or Hot Wheels AI as examples), it’s not important for us to understand it thoroughly. In general, we should rely on our sense of ethics to decide whether explainability is central to a given model.

It is also worth remembering that an alternative to the legal or ethical requirement to use explainable artificial intelligence might be the idea of envelopment proposed by Robbins. Robbins argues that artificial intelligence operating in a well-defined workspace (i.e. an envelope: physical and virtual microenvironments that allow AI models to realize their function while preventing harm to humans) would not need explainability, because its scope and mechanisms of operation would guarantee trustworthiness even if the details of its decisions were not fully transparent.

Of course, it’s not always feasible to create such an envelope, so Robbins’ concept does not preclude the development of XAI at all; it merely complements it.

Pros and cons

So far, this post may suggest that XAI brings nothing but advantages. Indeed, there are many. Let’s list a few (some have already appeared in the text; for new concepts, links are provided to delve deeper into the topic):

- More trust and confidence in the model output

- Wider adoption of models in production, because the reasons behind their predictions can be explained to stakeholders

- Help with detecting and debugging bias in the data

- Compliance with the law

- XAI facilitates the democratization of AI

- XAI is a step towards Responsible AI

But it is important to bear in mind that XAI is not a panacea, and alongside the widespread enthusiasm there are also criticisms. Here are some of them:

- XAI is at best unnecessary, at worst harmful, and threatens to stifle innovation

- XAI favors human decisions over machine decisions

- XAI focuses on process over outcome

- Human decisions often cannot be easily explained: they may be based on intuition that is hard to put into words. Why should machines be required to meet a higher standard than humans?

- Some algorithms seem to be inherently difficult to explain

- There is no mathematical definition of artificial intelligence interpretability, so it is hard to measure the “degree of explainability”

- Explanatory models can themselves be black boxes

- Interpretability might enable people or programs to manipulate the system

It’s worth watching an interesting Oxford-style debate between machine learning greats that took place a few years ago: The Great AI Debate – NIPS2017 – Yann LeCun.

You can hear the arguments of both camps, advocates and opponents, each supported by interesting examples.

XAI methods

XAI can be embedded in a given machine learning algorithm (explainability by design) or be an add-on independent of the model (via external, model-agnostic methods or surrogate models).

The first type concerns so-called “white-box” or “glass-box” models. Only simple machine learning algorithms (regression models, generalised linear models, decision trees) allow their results to be explained (interpreted) from within the model itself.

The opposite is true for the “black box” models being explained, such as deep learning models.

In the case of “black box” models, XAI relies on model-agnostic methods (not specific to a given algorithm). The explainer only analyses the inputs and outputs of the black-box model, without looking at what happens inside the algorithm. Other machine learning models summarise or approximate the output of the explained AI algorithm while being much simpler to interpret (they are “glass-box” models). In this category, we have global explanations, which show the general tendencies in how variables influence the model’s average output. For example, they may show that a 10% higher income translates, on average, into a 5% higher chance of getting credit. More flexible are the so-called local explanations, which explain a specific observation. For example, they may give the reason why a particular Mr. Smith did not get a loan.
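The difference between global and local explanations is easiest to see on a glass-box model. Below is a minimal sketch that assumes a logistic-regression credit model with hand-picked weights. The feature names, the weights, the average applicant and Mr. Smith’s data are all hypothetical. Because the model is linear in the log-odds, its global explanation is one weight per feature, stated on the log-odds scale rather than as a direct change in probability. The local explanation splits Mr. Smith’s score into per-feature contributions relative to an average applicant, which is one common convention among several:

```python
import math

# Hypothetical glass-box credit model: logistic regression with hand-picked
# weights (illustrative assumptions, not a fitted model).
WEIGHTS = {"income_k": 0.08, "debt_k": -0.15, "years_employed": 0.10}
BIAS = -3.0
# Hypothetical "average applicant" used as the reference for local explanations.
AVERAGE = {"income_k": 50.0, "debt_k": 10.0, "years_employed": 5.0}


def score(applicant):
    """Log-odds of approval: bias plus a weighted sum of the features."""
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def probability(applicant):
    """Approval probability via the logistic function."""
    return 1.0 / (1.0 + math.exp(-score(applicant)))


def global_explanation():
    """Global view: each weight is the change in log-odds per unit of a
    feature, and it is the same for every applicant."""
    return dict(WEIGHTS)


def local_explanation(applicant):
    """Local view: how far each feature moves this applicant's log-odds
    away from the average applicant's log-odds."""
    return {f: WEIGHTS[f] * (applicant[f] - AVERAGE[f]) for f in WEIGHTS}


mr_smith = {"income_k": 30.0, "debt_k": 25.0, "years_employed": 2.0}
contributions = local_explanation(mr_smith)
print("global:", global_explanation())
print("local:", contributions)
print("approval probability:", round(probability(mr_smith), 3))
```

For this made-up applicant the largest negative contribution comes from debt, so a local explanation would point to his debt first. The contributions also add up exactly to the gap between his score and the average applicant’s score, which is what makes this decomposition easy to read.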

Below is a non-exhaustive list of methods used to explain models.

Model Agnostic methods:

- Methods that summarise the effect of single variables on the algorithm’s output, as it were in isolation from other variables:

— Partial Dependence Plot (PDP)

— Individual Conditional Expectation (ICE), aka ceteris paribus profiles

— Accumulated Local Effects (ALE) Plot

- Methods that are similar to the previous ones but, in addition, illustrate the combined impact of multiple variables:

— Feature Interaction

- Methods based on the assumption that a variable which is unimportant for the outcome of the explained machine learning model can be randomly changed without having a major impact on that outcome:

— Permutation Feature Importance

- Methods that find an approximation to the model being explained, either at a global level (an explanation for the whole model) or at a local level (a separate explanation for each observation):

— Global Surrogate

— Local Surrogate (LIME) *

— Scoped Rules (Anchors)

- Methods which, using an approach derived from game theory, describe the influence (contribution) of individual variables on the outcome of the algorithm under explanation, at the level of individual observations or, in some cases, globally:

— Shapley Values

— SHAP (SHapley Additive exPlanations)
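To make the partial dependence and ICE entries concrete, here is a minimal sketch that computes ICE curves and a partial dependence plot as plain numbers instead of drawing them. It assumes a hypothetical stand-in “black box” (a hand-written function in which the effect of income depends on age), made-up data, and hypothetical function names:

```python
def black_box(x):
    """Hypothetical stand-in model: income matters more for applicants over 40."""
    income, age = x
    older = 1.0 if age > 40 else 0.0
    return 0.5 * income + 0.2 * income * older - 0.01 * age


def ice_curves(model, data, feature, grid):
    """ICE: for each observation, vary one feature over the grid while the
    other features stay fixed (ceteris paribus)."""
    curves = []
    for row in data:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value
            curve.append(model(modified))
        curves.append(curve)
    return curves


def partial_dependence(model, data, feature, grid):
    """PDP: the average of the ICE curves at each grid value."""
    curves = ice_curves(model, data, feature, grid)
    return [sum(curve[i] for curve in curves) / len(curves) for i in range(len(grid))]


# Hypothetical (income, age) observations and an income grid.
data = [(10.0, 25.0), (20.0, 45.0), (30.0, 60.0), (15.0, 35.0)]
grid = [0.0, 10.0, 20.0, 30.0]

ice = ice_curves(black_box, data, feature=0, grid=grid)
pdp = partial_dependence(black_box, data, feature=0, grid=grid)
print("ICE:", ice)
print("PDP:", pdp)
```

The ICE curves rise by 0.5 per unit of income for the two applicants aged 40 or younger and by 0.7 for the two older ones. The PDP averages them into a single slope of 0.6, hiding the interaction that the individual curves reveal.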
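The assumption behind permutation feature importance can be tested directly. Shuffle one column, which breaks its link with the target, and measure how much the model’s error grows. Below is a minimal sketch with toy data and a stand-in model, both hypothetical. For brevity it measures importance on the same data the model fits, whereas in practice a held-out set is often used:

```python
import random


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Importance of a feature = average increase in error after randomly
    shuffling that feature's column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [model(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            error = mean_squared_error(y, [model(row) for row in X_perm])
            increases.append(error - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Toy data: the target depends strongly on feature 0, weakly on feature 1,
# and not at all on feature 2.
data_rng = random.Random(42)
X = [[data_rng.uniform(0, 1) for _ in range(3)] for _ in range(200)]
y = [3.0 * a + 0.5 * b for a, b, _ in X]


def model(row):
    # Stand-in for a trained black box that happens to fit the data exactly.
    return 3.0 * row[0] + 0.5 * row[1]


importances = permutation_importance(model, X, y)
print(importances)
```

The third feature, which the model ignores, gets an importance of exactly zero, while the first feature, which has the largest weight, comes out on top.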
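The game-theoretic idea behind Shapley values can be shown by brute force. Average each feature’s marginal contribution over every coalition of the other features. This sketch assumes a made-up three-feature model and represents a “missing” feature by replacing it with a fixed baseline value, which is only one of several conventions. Enumerating all coalitions costs on the order of 2^n model evaluations, which is why SHAP and similar tools rely on approximations for realistic models:

```python
from itertools import combinations
from math import factorial


def shapley_values(value, players):
    """Exact Shapley values: each player's marginal contribution, weighted
    over all coalitions of the other players."""
    n = len(players)
    result = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += weight * (value(s | {p}) - value(s))
        result[p] = total
    return result


# Hypothetical model, instance to explain, and baseline values.
FEATURES = ["x1", "x2", "x3"]
instance = {"x1": 1.0, "x2": 2.0, "x3": 3.0}
baseline = {"x1": 0.0, "x2": 0.0, "x3": 0.0}


def model(x):
    return 2.0 * x["x1"] + x["x2"] + x["x1"] * x["x3"]


def value(coalition):
    """Model output when only the features in the coalition take the
    instance's values and the rest stay at the baseline."""
    x = {f: (instance[f] if f in coalition else baseline[f]) for f in FEATURES}
    return model(x)


phi = shapley_values(value, FEATURES)
print(phi)
```

Here x2 enters additively and receives exactly its own effect (2), while the interaction term x1·x3 = 3 is split evenly between x1 and x3, giving 3.5 and 1.5. The three values add up to the difference between the prediction for the instance (7) and for the baseline (0).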

*We dedicated our latest webinar to the use of LIME in NLP. If you are interested in its mathematical background or want to see live examples of its use, feel free to watch the recording.
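The core idea of LIME can be sketched in a few dozen lines. Sample perturbations around one instance, weight them by proximity with an exponential kernel, and fit a weighted linear model whose coefficients serve as the local explanation. This is a simplified sketch for numeric tabular inputs under assumed sampling and kernel settings. It is not the lime library’s implementation, and the model and all parameter values are hypothetical:

```python
import math
import random


def black_box(x):
    # Hypothetical nonlinear model standing in for a real one.
    return x[0] ** 2 + 3.0 * x[1]


def solve(matrix, rhs):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def lime_explain(model, instance, n_samples=500, scale=0.1, kernel_width=0.2, seed=0):
    """LIME-style local surrogate: perturb around the instance, weight samples
    by proximity, fit weighted least squares. Returns [intercept, w1, w2, ...]."""
    rng = random.Random(seed)
    d = len(instance)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, instance))
        w = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        row = [1.0] + z
        y = model(z)
        # Accumulate the weighted normal equations (X^T W X) b = X^T W y.
        for i in range(d + 1):
            xtwy[i] += w * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += w * row[i] * row[j]
    return solve(xtwx, xtwy)


coefs = lime_explain(black_box, [2.0, 1.0])
print("local weights:", coefs[1:])
```

For the hypothetical model x1² + 3·x2 at the point (2, 1), the fitted weights come out close to the local gradient (4, 3), which is what a good local surrogate should recover.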

Example-based explanations

- Counterfactual explanations tell us how an instance has to change for its prediction to change significantly. By creating counterfactual instances, we learn how the model makes its predictions and can explain individual predictions. An example of this kind of explanation: You would get a loan if your earnings were 5% higher or if you paid £200 more per month toward your current loan.

- Adversarial examples are counterfactuals used to fool machine learning models. The emphasis is on flipping the prediction and not explaining it.

- Prototypes are a selection of representative instances from the data and criticisms are instances that are not well represented by those prototypes.

- Influential instances are the training data points that were the most influential for the parameters of a prediction model or the predictions themselves. Identifying and analysing influential instances helps to find problems with the data, debug the model and understand the model’s behavior better.

- k-nearest neighbors model: An interpretable machine learning model based on examples.
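A counterfactual explanation like the loan example above can be found by searching for the smallest change that flips the decision. The sketch below assumes a hypothetical linear credit rule and searches only one feature (income) in whole-percent steps. Practical counterfactual methods search several features at once and favour changes that are small and plausible:

```python
def approved(applicant):
    """Hypothetical black-box credit rule (an assumption for illustration)."""
    score = 0.04 * applicant["income_k"] - 0.1 * applicant["debt_k"] - 1.0
    return score >= 0.0


def income_counterfactual(applicant, max_increase_pct=100):
    """Smallest whole-percent income increase that flips a denial into an
    approval, or None if no increase up to max_increase_pct is enough."""
    if approved(applicant):
        return 0
    for pct in range(1, max_increase_pct + 1):
        candidate = dict(applicant)
        candidate["income_k"] = applicant["income_k"] * (1 + pct / 100)
        if approved(candidate):
            return pct
    return None


applicant = {"income_k": 50.0, "debt_k": 10.5}
pct = income_counterfactual(applicant)
print(f"You would get the loan if your income were {pct}% higher.")
```

For this made-up applicant, a 2% raise is not enough but 3% is, so the search returns 3.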

Neural network interpretation

- Feature visualization (What features has the neural network learned?)

- Concepts (Which more abstract concepts has the neural network learned?)

- Feature attribution (How did each input contribute to a particular prediction?)

- Model distillation or ablation study (How can we explain a neural network with a simpler model?)
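Feature attribution can be illustrated with gradient × input, one of the simplest attribution methods: multiply each input by the derivative of the output with respect to that input. The sketch uses a tiny two-layer network with made-up weights and computes the gradient by hand with the chain rule, whereas real tools rely on automatic differentiation. More robust methods, such as integrated gradients, build on the same idea:

```python
import math

# Tiny hypothetical network with hand-picked weights:
# 3 inputs -> 2 tanh hidden units -> 1 linear output.
W1 = [[0.5, -1.0, 0.2],
      [1.5, 0.3, -0.7]]
B1 = [0.1, -0.2]
W2 = [1.2, -0.8]
B2 = 0.05


def forward(x):
    """Return the network output and the hidden activations."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, B1)]
    return sum(w * h for w, h in zip(W2, hidden)) + B2, hidden


def input_gradient(x):
    """d(output)/d(input_i) by the chain rule through the tanh layer,
    using tanh'(a) = 1 - tanh(a)^2."""
    _, hidden = forward(x)
    return [sum(W2[k] * (1 - hidden[k] ** 2) * W1[k][i] for k in range(len(W2)))
            for i in range(len(x))]


def gradient_times_input(x):
    """Gradient x input: one attribution score per input feature."""
    return [g * xi for g, xi in zip(input_gradient(x), x)]


x = [1.0, 0.5, -2.0]
print(gradient_times_input(x))
```

A quick way to trust a hand-written gradient like this is to compare it with finite differences of the forward pass.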

To become more familiar with the subject we recommend these two books:

And if you are interested in a more extensive list with references to the scientific literature, please see Appendix A in the article “Explainable Artificial Intelligence: a Systematic Review”.

XAI at edrone

Finally, we would like to briefly share our approach to explainable artificial intelligence. At edrone, we develop an Autonomous Voice Assistant for e-commerce. It is a solution that uses artificial intelligence methods, and we pay attention to explainability because we believe it helps us build better products.

As we wrote earlier, XAI helps us debug our models and facilitates conversations with customers, among other things. We also hold in-house seminars to discuss XAI-related topics (to show our cards a bit, this post is based on one of those seminars), and we work with academia on XAI (here is our latest paper on the topic: https://link.springer.com/chapter/10.1007/978-3-030-77980-1_28).

You can expect more posts about XAI on our blog soon, so stay tuned 🙂

Grzegorz Knor

He began his data analysis career in 2007 and has been involved in natural language processing since 2017. A physicist by training, he focuses on ethics and on understanding artificial intelligence.
